Press

Updated on August 4, 2025

AI and large-language-model clusters are straining the limits of traditional fat-tree and star networks. When tens of billions of parameters move across 224 Gbps links, the switch tiers, cable count, and power draw all climb sharply. Our newest white paper explains how a 3D Torus rack-level fabric trims hop counts, shortens cable runs, and reduces switch silicon while preserving the low latency and massive bandwidth required by modern XPU fleets.

Inside the paper you will find head-to-head benchmarks of 3D Torus against Fully-Connected, Tree, and Dragonfly designs under tensor- and pipeline-parallel training. Detailed latency heat maps, watt-per-teraflop savings, and bill-of-materials comparisons show why a small-diameter torus consistently outperforms sprawling Clos fabrics. The guide also covers 224 Gbps SerDes layout, efficient rack wiring, and fault-tolerant routing so that architects can scale cleanly from a single rack to exascale pods.

Do not let yesterday’s network architecture throttle tomorrow’s models. Download the full white paper today to blueprint a 3D Torus topology that accelerates training, lowers power budgets, and cuts total cost of ownership for your AI infrastructure.

1 min read

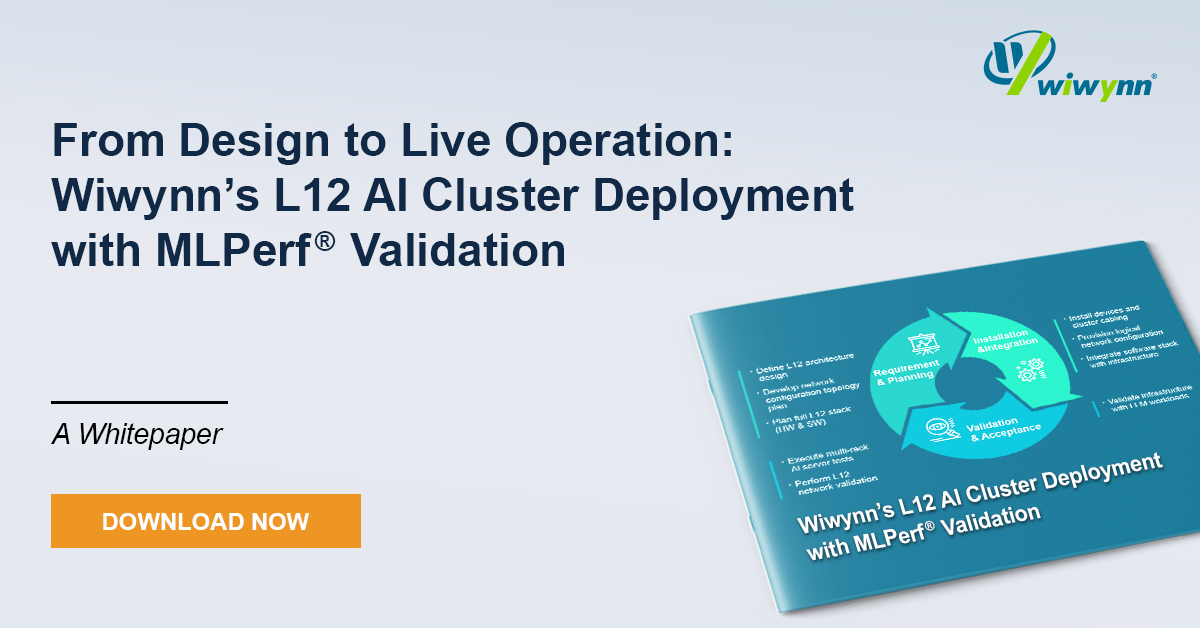

Deploying large-scale AI clusters introduces engineering challenges that extend well beyond the individual server rack. From liquid cooling...

1 min read

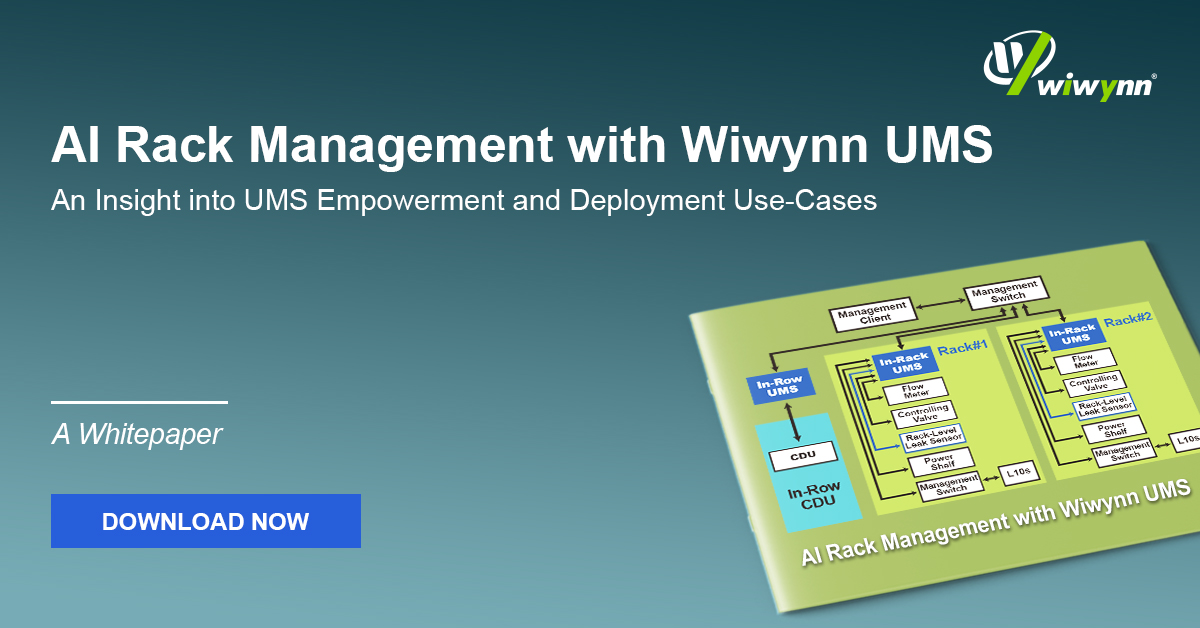

This paper discusses the rapid expansion of AI workloads and the resulting transformation in data center infrastructure requirements. Traditional...