2 min read

Autonomous AI Agent for End-to-End Component Data Extraction

1. Objective
   - Streamline: complex, error-prone manual data entry
   - Reallocate: engineering talent to high-value innovation
   - Automation: Achieve...

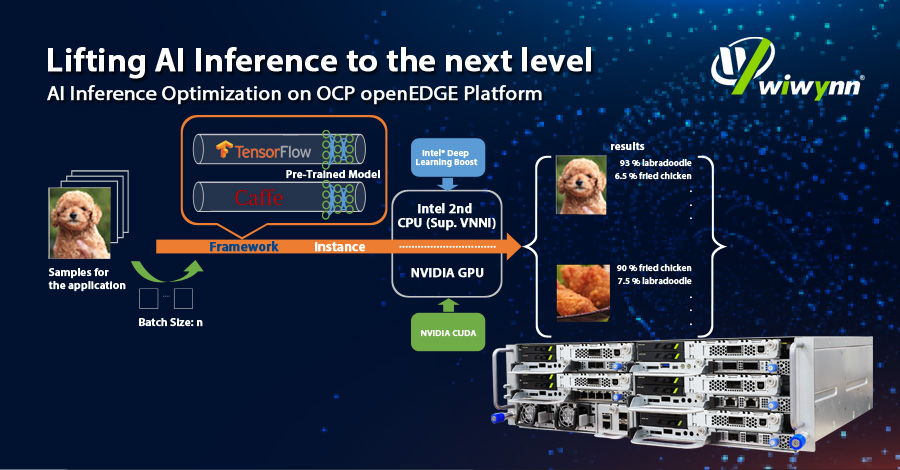

Looking for an Edge AI server for your new applications? What is the most optimized solution? Which parameters should you take into consideration? Check out Wiwynn’s latest whitepaper on AI inference optimization on the OCP openEDGE platform.

See how the Wiwynn EP100 helps you keep pace with the thriving era of edge applications and diverse AI inference workloads through powerful CPU and GPU inference acceleration!

Leave your contact information to download the whitepaper!


1 min read

Deploying large-scale AI clusters introduces engineering challenges that extend well beyond the individual server rack. From liquid cooling...

1 min read

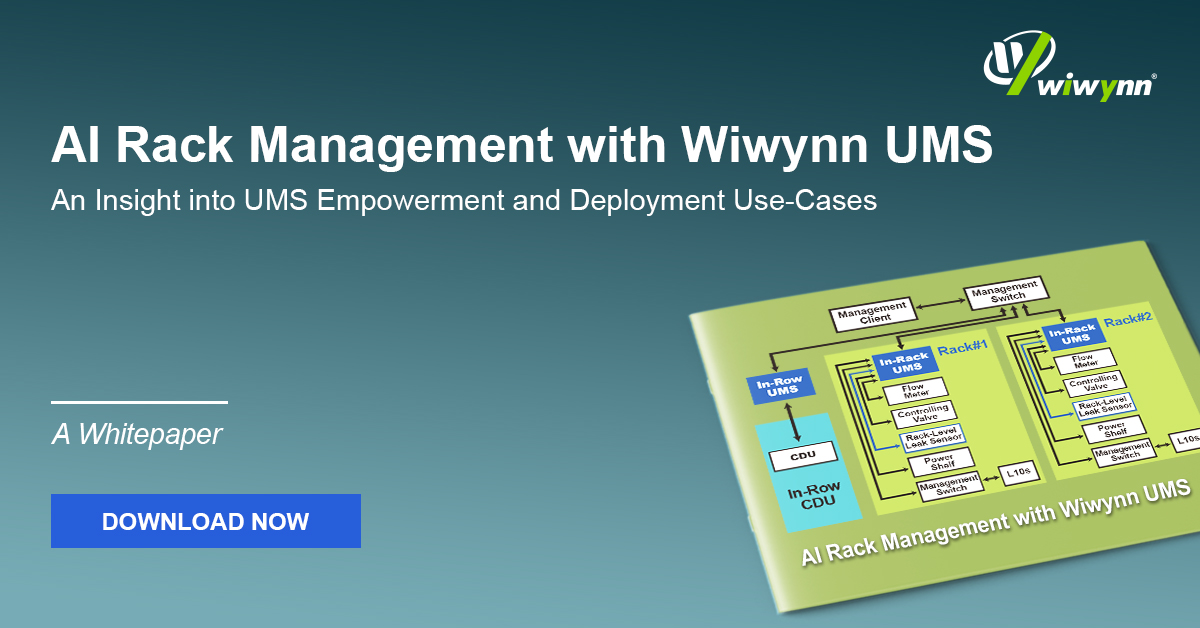

This paper discusses the rapid expansion of AI workloads and the resulting transformation in data center infrastructure requirements. Traditional...