White Paper: Wiwynn Management Solution for Advanced Cooling Technology

In an era where computing speeds are skyrocketing, the hunt for cooling technologies that can keep up is on. Enter the world of liquid and immersion...

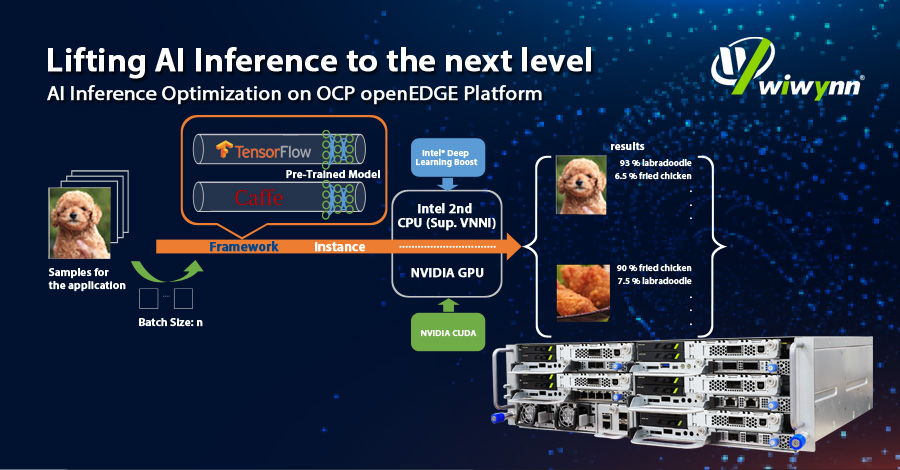

Looking for an edge AI server for your new applications? What is the most optimized solution, and which parameters should you take into consideration? Check out Wiwynn's latest white paper on AI inference optimization on the OCP openEDGE platform.

See how the Wiwynn EP100 helps you keep pace with the thriving era of edge applications and diverse AI inference workloads through powerful CPU and GPU inference acceleration.


